Feature Effects in Machine Learning Models

Abstract

Supervised machine learning models are mostly black boxes. The method we propose aims to improve the understanding of these black boxes by quantifying the effect sizes of features. In the social sciences, average marginal effects are used to determine effect sizes in logistic regression models. Applied to a machine learning model, a single average marginal effect usually does not adequately represent the non-convex, non-monotonic response function. Graphical methods such as partial dependence plots or accumulated local effect plots visualize the response function but do not offer a quantitative interpretation. Our method combines both ideas. First, we use one of these graphical methods to identify intervals within which the response function is relatively stable. Second, we report an estimate of the feature effect separately for each interval. The method determines the number of necessary intervals automatically.
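
The two steps can be illustrated with a minimal sketch in base R. It is not the implementation behind the proposed method: the data are simulated, a piecewise-linear `lm` stands in for a black-box model, the helper names `partial_dependence` and `interval_effects` are hypothetical, and the single breakpoint at 20 is set by hand, whereas the method itself chooses the intervals automatically.

```r
# Simulated data (made up for illustration): the response rises with x1
# up to 20 and falls afterwards.
set.seed(1)
n <- 500
dat <- data.frame(x1 = runif(n, -5, 32), x2 = rnorm(n))
dat$y <- ifelse(dat$x1 < 20, 100 * dat$x1, 2000 - 50 * (dat$x1 - 20)) +
  10 * dat$x2 + rnorm(n, sd = 20)

# Stand-in for a black-box learner; any model with a predict() method works.
fit <- lm(y ~ x1 + pmax(x1 - 20, 0) + x2, data = dat)

# Step 1: partial dependence curve, i.e. the average prediction when the
# feature is fixed at each grid value for all observations.
partial_dependence <- function(model, data, feature, grid) {
  sapply(grid, function(v) {
    data[[feature]] <- v
    mean(predict(model, newdata = data))
  })
}

grid <- seq(min(dat$x1), max(dat$x1), length.out = 50)
pd <- partial_dependence(fit, dat, "x1", grid)

# Step 2: one effect per interval, here the slope of a straight line
# fitted to the curve inside each interval.
interval_effects <- function(grid, pd, breaks) {
  bins <- cut(grid, breaks = breaks, include.lowest = TRUE, right = FALSE)
  sapply(split(data.frame(grid, pd), bins), function(d) {
    unname(coef(lm(pd ~ grid, data = d))[2])
  })
}

# Assumption: the breakpoint is supplied manually for this sketch.
effects <- interval_effects(grid, pd, breaks = c(min(grid), 20, max(grid)))
print(round(effects, 1))
```

The result is a named vector with one slope per interval, which has the same shape as the per-interval output reported for the bike sharing models further down.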

Example: Bike Sharing Data

The following example shows how the method can help to understand heterogeneous feature effects. We apply the method to the Bike Sharing dataset (Fanaee-T and Gama 2013), which was further processed by Molnar (2018). See table 1 for an overview of all the features. The target cnt is the number of bicycles lent by a bicycle sharing company per day. The features comprise calendrical and meteorological information for each day.

Table 1: First rows of the Bike Sharing dataset.

| season | yr | mnth | holiday | weekday | weathersit | temp | hum | windspeed | cnt | days_since_2011 |
|---|---|---|---|---|---|---|---|---|---|---|
| SPRING | 2011 | JAN | 0 | SAT | MISTY | 8.18 | 80.6 | 10.8 | 985 | 0 |
| SPRING | 2011 | JAN | 0 | SUN | MISTY | 9.08 | 69.6 | 16.7 | 801 | 1 |
| SPRING | 2011 | JAN | 0 | MON | GOOD | 1.23 | 43.7 | 16.6 | 1349 | 2 |
| SPRING | 2011 | JAN | 0 | TUE | GOOD | 1.40 | 59.0 | 10.7 | 1562 | 3 |
| SPRING | 2011 | JAN | 0 | WED | GOOD | 2.67 | 43.7 | 12.5 | 1600 | 4 |
| SPRING | 2011 | JAN | 0 | THU | GOOD | 1.60 | 51.8 | 6.0 | 1606 | 5 |

Model Training

We use a linear model (R Core Team 2019), an SVM (Meyer et al. 2019), a random forest (Breiman et al. 2018) and gradient boosting (Greenwell et al. 2019). We compare the performance of all models on a hold-out test set against a baseline that predicts the mean of the target for every observation (see table 2). The linear model performs relatively well, but the more complex models achieve a lower MSE and a higher \(R^2\) even without extensive tuning.

Table 2: Performance on the hold-out test set.

| | mean(y) | lm | svm | rf | gbm |
|---|---|---|---|---|---|
| mse | 3.75e+06 | 5.30e+05 | 4.33e+05 | 4.22e+05 | 4.12e+05 |
| rmsle | 5.93e-01 | 2.36e-01 | 2.44e-01 | 2.61e-01 | 2.30e-01 |
| rsq | 0.00e+00 | 8.59e-01 | 8.85e-01 | 8.88e-01 | 8.90e-01 |
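
As a rough sketch of how such a comparison can be set up, the base R snippet below computes the three metrics for a mean baseline and a linear model on a hold-out set. The data are simulated and only borrow the column names temp, hum and cnt. The metric definitions are the common textbook ones and are an assumption here; they may differ in detail from the ones behind the table.

```r
# Common definitions of the three metrics (assumed, not taken from the paper).
mse   <- function(y, yhat) mean((y - yhat)^2)
rmsle <- function(y, yhat) sqrt(mean((log1p(yhat) - log1p(y))^2))
rsq   <- function(y, yhat) 1 - sum((y - yhat)^2) / sum((y - mean(y))^2)

# Simulated stand-in data; not the Bike Sharing dataset.
set.seed(42)
n <- 300
toy <- data.frame(temp = runif(n, 0, 30), hum = runif(n, 20, 95))
toy$cnt <- pmax(round(1000 + 120 * toy$temp - 15 * toy$hum +
                        rnorm(n, sd = 300)), 1)

# Hold out 100 observations for testing.
test_idx <- sample(n, 100)
train <- toy[-test_idx, ]
test  <- toy[test_idx, ]

fit <- lm(cnt ~ temp + hum, data = train)

# Predictions on the test set: training mean as baseline, then the model.
# Negative predictions are clipped at zero so that log1p() stays defined.
pred <- list(
  baseline = rep(mean(train$cnt), nrow(test)),
  lm       = pmax(predict(fit, newdata = test), 0)
)

# One column per predictor, one row per metric.
results <- sapply(pred, function(p) {
  c(mse = mse(test$cnt, p), rmsle = rmsle(test$cnt, p), rsq = rsq(test$cnt, p))
})
print(signif(results, 3))
```

Further models can be added by appending their test-set predictions to the `pred` list.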

Analysis

We now analyse how changing feature values influences the predicted number of bikes. We focus on the three numerical features temp (temperature in degrees Celsius), hum (humidity in percent) and windspeed (in kilometers per hour). We apply our method to each of the complex models with default settings. The output in R looks as follows:

## lm:

## temp hum windspeed

## 98.2 -13.7 -40.1

## SVM (temp)

## [-5.221, 19.26) [19.26, 32.498]

## 128.11 3.87

## SVM (hum)

## [18.792, 56.792) [56.792, 93.957]

## 7.19 -32.71

## SVM (windspeed)

## [2.834, 14.876) [14.876, 34]

## -22.7 -64.7

## RF (temp)

## [-5.221, 20.278) [20.278, 32.498]

## 104.5 -62.7

## RF (hum)

## [18.792, 64.667) [64.667, 93.957]

## -1.18 -31.97

## RF (windspeed)

## [2.834, 18.417) [18.417, 24.251) [24.251, 34]

## -16.46 -78.30 -1.64

## GBM (temp)

## [-5.221, 16.792) [16.792, 26.075) [26.075, 32.498]

## 124.2 13.9 -239.7

## GBM (hum)

## [18.792, 64.667) [64.667, 83.792) [83.792, 87.25) [87.25, 93.957]

## -3.86 -39.63 -227.00 91.19

## GBM (windspeed)

## [2.834, 8.584) [8.584, 22.959) [22.959, 24.251) [24.251, 34]

## -5.32e+01 -1.85e+01 -5.04e+02 6.60e-14

The marginal effect of the linear model is equal to the model coefficient. According to the model, an increase of the temperature by 1° Celsius leads to a predicted increase of \(98.188\) rented bicycles per day. This seems plausible for an average day: the higher the temperature, the more people are willing to go by bike. But one could easily imagine that a temperature rise on a hot day makes people less likely to rent a bike in order to avoid physical exertion. This is exactly what the results of the complex models suggest. Below 20° the SVM predicts an increase of \(128.106\) bikes per day for an additional degree Celsius. Above 20° the effect becomes very small \((3.867)\). The results of the random forest show two cutoff points. At around 13° the positive marginal effect becomes smaller in size, and above 25° the effect is negative. The effect for gradient boosting is partitioned into four intervals. The effects in the three intervals below 27° are positive; above 27° the effect is negative, so the overall pattern is similar to that of the other two models. However, the absolute values of the effect fluctuate substantially for gradient boosting.
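
The statement that the marginal effect of a linear model equals its coefficient can be checked numerically. The sketch below uses the built-in `mtcars` data rather than the bike sharing data, and `ame` is a hypothetical helper that approximates the average marginal effect with central finite differences. It also fits a model with a quadratic term to show why a single averaged number can be misleading once the slope varies across the feature range.

```r
# Average marginal effect via central finite differences (hypothetical helper).
ame <- function(model, data, feature, h = 1e-4) {
  up <- data
  down <- data
  up[[feature]] <- up[[feature]] + h
  down[[feature]] <- down[[feature]] - h
  mean((predict(model, newdata = up) - predict(model, newdata = down)) / (2 * h))
}

# Linear model: the AME coincides with the coefficient.
fit_lin  <- lm(mpg ~ wt + hp, data = mtcars)
ame_lin  <- ame(fit_lin, mtcars, "wt")
coef_lin <- unname(coef(fit_lin)["wt"])
print(c(ame = ame_lin, coefficient = coef_lin))

# Model with a quadratic term: the marginal effect of hp now depends on hp,
# so the AME is only the mean of observation-specific slopes.
fit_quad <- lm(mpg ~ wt + hp + I(hp^2), data = mtcars)
ame_quad <- ame(fit_quad, mtcars, "hp")
slopes   <- coef(fit_quad)["hp"] + 2 * coef(fit_quad)["I(hp^2)"] * mtcars$hp
print(c(ame = ame_quad, min_slope = min(slopes), max_slope = max(slopes)))
```

Comparing `ame_quad` with the range of `slopes` shows how much variation the average conceals, which is the motivation for reporting effects per interval.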

For humidity the linear model predicts a negative effect. For the SVM the effect is positive up to around 43%. Between 43% and 65% the effect is negative but smaller in size. Above 65% it is negative and four times larger than in the previous interval. The results for the random forest and gradient boosting both show very small effects below roughly 65% humidity. Above this point both models predict a decrease of rented bicycles with rising humidity. The histogram (figure 1) suggests why the effect of humidity is probably non-monotonic. Humidity is usually between 50% and 75%. Values outside this range indicate more extreme weather conditions. If uncommonly dry or wet air reduces people’s desire to ride a bike, we would expect a positive effect on the number of rented bikes if humidity is below the familiar range, and a negative effect if it is above.

Figure 1: Histogram for feature humidity of the Bike Sharing dataset.

Wind makes cycling less attractive, so one would associate higher wind speed with a reduced willingness to rent a bike. The linear model predicts a negative effect (\(-40.149\)). For both the SVM and the random forest our method proposes a negative effect that is constant over the whole feature distribution. Consequently, in the case of wind speed a linear model seems appropriate to represent the relationship. Gradient boosting shows a negative effect in five intervals, but the reported values are again not very stable.

In this example application we showed that the linear model does not suffice to represent the varying types of response function. A user may come to wrong conclusions about how the number of rented bikes depends on the weather conditions of the day. The proposed method enables users to make quantitative statements as if they were using a linear model while preserving the non-linear, non-monotonic relationship where necessary. Thus, they can combine a better performing model with a comprehensible interpretation. The results for the gradient boosting model are less convincing. Due to its stepped response function, the model is less suited to quantitative statements about the feature effect: the estimates fluctuate between high values and values close to zero.

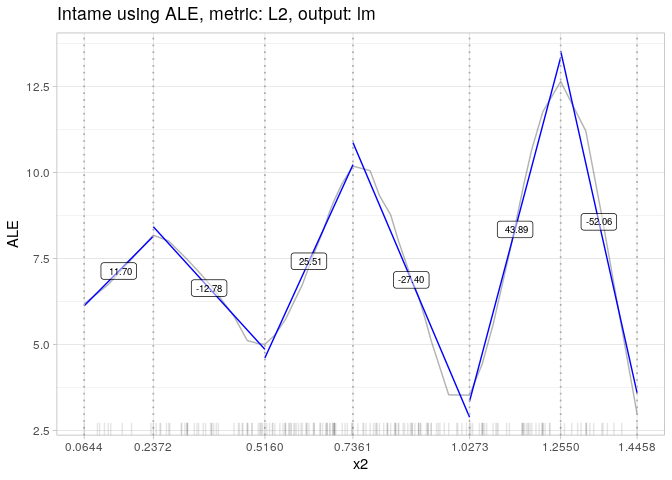

Figure 2: Bike sharing data. Results for the SVM.

Figure 3: Bike sharing data. Results for gradient boosting.

Figure 4: Bike sharing data. Results for the random forest.

R package

The method is implemented in R and supports a variety of models out of the box. Source code and more information can be found on GitHub: https://github.com/BodoBurger/intame

References

Breiman, Leo, Adele Cutler, Andy Liaw, and Matthew Wiener. 2018. randomForest: Breiman and Cutler’s Random Forests for Classification and Regression. https://CRAN.R-project.org/package=randomForest.

Fanaee-T, Hadi, and Joao Gama. 2013. “Event Labeling Combining Ensemble Detectors and Background Knowledge.” Progress in Artificial Intelligence. Springer Berlin Heidelberg, 1–15. https://doi.org/10.1007/s13748-013-0040-3.

Greenwell, Brandon, Bradley Boehmke, Jay Cunningham, and GBM Developers. 2019. gbm: Generalized Boosted Regression Models. https://CRAN.R-project.org/package=gbm.

Meyer, David, Evgenia Dimitriadou, Kurt Hornik, Andreas Weingessel, and Friedrich Leisch. 2019. e1071: Misc Functions of the Department of Statistics, Probability Theory Group (Formerly: E1071), TU Wien. https://CRAN.R-project.org/package=e1071.

Molnar, Christoph. 2018. Interpretable Machine Learning - a Guide for Making Black Box Models Explainable. Creative Commons. https://christophm.github.io/interpretable-ml-book/.

R Core Team. 2019. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing. https://www.R-project.org/.